What is CPU Cache? Understanding L1, L2, and L3 Cache in Depth

If you’ve ever wondered how modern processors handle data at blistering speeds, the answer lies not just in the CPU’s clock frequency but in a small, ultra-fast memory called CPU cache. This cache is a critical component in every computer, acting as a high-speed buffer between the super-fast CPU and the slower main memory (RAM). By storing frequently accessed data and instructions close to the processor cores, it dramatically reduces data access latency and is a fundamental pillar of CPU performance.

Understanding the hierarchy of L1, L2, and L3 cache is key to grasping how modern CPUs efficiently manage data. This article will break down the role of each cache level, its impact on your computing experience, and why this architecture is essential for everything from loading an application to advanced gaming.

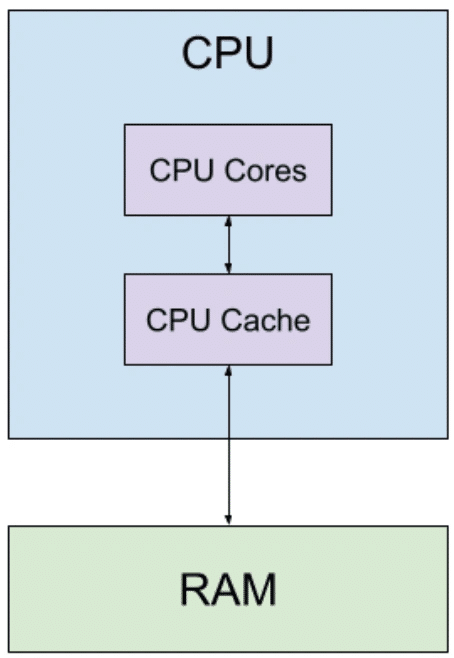

A CPU cache is a tiny, ultra-fast memory built directly into the processor. Its purpose? To store copies of the most frequently used data so the CPU can access it almost instantly, without waiting for slower system memory (RAM). This is essential because a single trip to RAM can cost a modern processor hundreds of clock cycles, time it could otherwise spend doing useful work.

Without cache memory, the processor would spend most of its time waiting for data rather than processing it.

Why CPU Cache is Necessary: Bridging the Speed Gap

The primary purpose of CPU cache is to solve a major bottleneck in computing: the significant speed difference between the CPU and RAM. Modern processors can execute billions of instructions per second, but often need to wait for data to be fetched from the main memory, which is much slower.

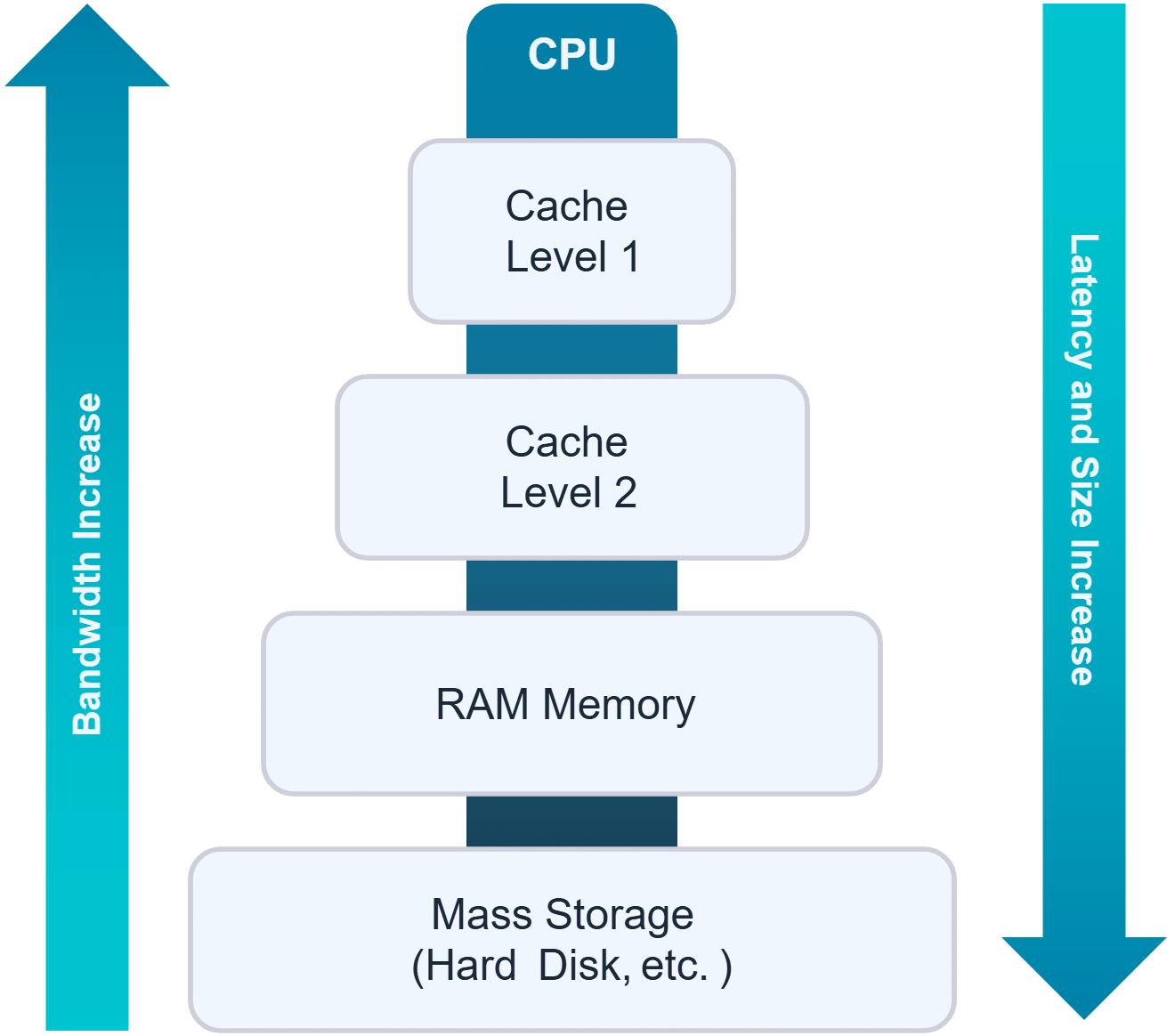

CPU cache memory is built using Static Random-Access Memory (SRAM), which is much faster than the Dynamic RAM (DRAM) used for main memory. However, SRAM is also more expensive and takes up more physical space on the chip. To balance cost, size, and speed, CPU designers use a strategy called a cache hierarchy. This multi-level approach ensures that the most critical data is stored in the smallest, fastest cache, while less frequently used data resides in larger, slower caches, preventing the CPU from idling while waiting for data.

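The CPU always checks its caches first and only reaches out to RAM (or, beyond that, storage) when the data is not there. This arrangement, ordered from the smallest and fastest memory to the largest and slowest, is known as the memory hierarchy, and it is designed to minimize latency (the time it takes to get data).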
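This back-of-the-envelope calculation of average memory access time (AMAT) in Python shows why checking the fast levels first pays off. Each level adds its own lookup time, plus its miss rate multiplied by the cost of going further down. The hit rates and cycle counts are hypothetical round numbers chosen for illustration, not measurements of any real CPU. The function name `average_access_time` is our own.

```python
def average_access_time(levels, memory_latency):
    """Average memory access time (AMAT), in cycles, for a multi-level cache.

    levels: list of (hit_latency_cycles, local_hit_rate) tuples, ordered L1 -> L3.
    memory_latency: cycles to fetch from RAM after missing every cache level.

    AMAT = t_L1 + m_L1 * (t_L2 + m_L2 * (t_L3 + m_L3 * t_RAM))
    where m_Lx = 1 - hit_rate_Lx is the local miss rate at that level.
    """
    amat = memory_latency
    # Work backwards from RAM: each level adds its own lookup time,
    # plus its miss rate times the cost of everything below it.
    for hit_latency, hit_rate in reversed(levels):
        amat = hit_latency + (1.0 - hit_rate) * amat
    return amat


# Hypothetical figures for illustration only (not from a real CPU datasheet).
hierarchy = [
    (4, 0.95),   # L1: 4 cycles, 95% of accesses hit
    (14, 0.80),  # L2: 14 cycles, 80% of L1 misses hit here
    (50, 0.70),  # L3: 50 cycles, 70% of L2 misses hit here
]
ram_only = average_access_time([], 300)
with_cache = average_access_time(hierarchy, 300)
print(f"RAM only:   {ram_only:.1f} cycles per access")
print(f"With cache: {with_cache:.1f} cycles per access")
```

With these made-up numbers, the average access drops from 300 cycles (RAM every time) to about 6 cycles, because 95% of accesses never leave L1.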

The Three Levels of CPU Cache: A Hierarchy of Speed

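The cache hierarchy is typically structured into three levels: L1, L2, and L3. Each level serves a distinct purpose and offers a different balance of speed and size. The following table summarizes these levels.

| Level | Location | Typical size | Speed |
|---|---|---|---|
| L1 | Inside each core (split into instruction and data caches) | 32KB–128KB per core | Fastest |
| L2 | Per core, or shared by a few cores | 256KB–2MB per core | Slower than L1 |
| L3 | Shared across the cores on the die | 4MB to 64MB or more | Slowest of the three, but still far faster than RAM |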

How CPU Cache Works: The Basics

When the CPU needs data, it follows a process:

- Cache Lookup: It first checks if the required data is already stored in cache.

- Cache Hit: If found, the CPU retrieves it quickly, without going to a slower level.

- Cache Miss: If not found, it fetches the data from the next level (L2, L3, or RAM).

- Replacement: To make room for the newly fetched data, the cache evicts an existing entry, chosen by a replacement algorithm such as LRU (Least Recently Used).

This design ensures the CPU is fed with data as quickly as possible, avoiding idle cycles.
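The lookup, hit, miss, and replacement steps above can be sketched as a toy Python model. It makes several simplifying assumptions. It models a single, fully associative cache. It tracks whole addresses rather than fixed-size cache lines. It uses an `OrderedDict` to keep entries in least-recently-used order. The `LRUCache` class is a hypothetical illustration, not a description of how hardware is built.

```python
from collections import OrderedDict


class LRUCache:
    """A toy fully associative cache with Least Recently Used replacement.

    Keys stand in for memory addresses; real hardware tracks fixed-size
    cache lines and usually approximates LRU rather than tracking it exactly.
    """

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()  # address -> data, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, address, backing_store):
        # Cache lookup.
        if address in self.lines:
            # Cache hit: serve from cache and mark as most recently used.
            self.hits += 1
            self.lines.move_to_end(address)
            return self.lines[address]
        # Cache miss: fetch from the next level (here, a plain dict).
        self.misses += 1
        data = backing_store[address]
        if len(self.lines) >= self.capacity:
            # Replacement: evict the least recently used entry (the first one).
            self.lines.popitem(last=False)
        self.lines[address] = data
        return data


ram = {addr: f"value@{addr}" for addr in range(100)}
cache = LRUCache(capacity=3)
for addr in [1, 2, 3, 1, 4, 2, 1]:
    cache.read(addr, ram)
print(cache.hits, cache.misses, list(cache.lines))
```

Tracing the sequence shows each step:

- Addresses 1, 2, and 3 miss and fill the cache.
- Address 1 then hits.
- The misses on 4 and 2 evict the least recently used entries (2, then 3).

The cache ends up holding 4, 2, and 1, with 2 hits and 5 misses in total.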

How CPU Cache Affects Real-World Performance and Gaming

The size and efficiency of the CPU cache have a direct impact on application performance, especially in tasks that require rapid access to large datasets.

- Gaming: Games, particularly open-world titles with large, constantly changing game state, benefit immensely from a large cache. A larger L3 cache, like the 96MB found on AMD Ryzen 7 7800X3D and 9800X3D processors with their 3D V-Cache technology, can lead to higher and more stable frame rates by reducing stuttering. This happens because the CPU can keep more of the game’s working data (game logic, physics, AI, and the draw-call information it prepares for the GPU) in cache instead of constantly waiting for RAM.

- Professional Applications: Tasks like video editing, 3D rendering, and data analytics involve processing massive amounts of data. A larger cache allows the CPU to keep more of this active data close at hand, leading to faster rendering times, quicker code compilation, and speedier data processing.

For more details on how these components work together, see our guide to CPU Fundamentals.

Deep Dive into Cache Levels: L1, L2, L3

Modern processors use a multi-level cache system to balance speed, size, and cost.

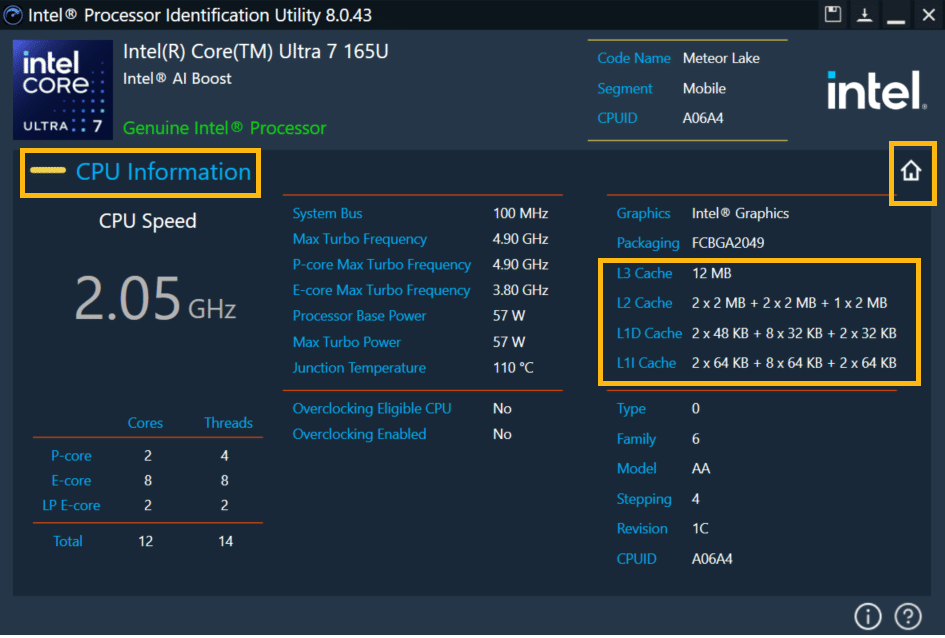

L1 Cache – Level 1

- Purpose: Immediate access to critical data and instructions.

- Location: Inside each CPU core.

- Speed: Fastest cache (typically about 3–5 clock cycles of latency on modern CPUs).

- Size: Smallest (usually 32KB–128KB per core).

- Structure: Often split into:

  - L1i (Instruction Cache): Stores instructions.

  - L1d (Data Cache): Stores actual data.

Because it sits right next to the core’s execution units, L1 cache has the lowest latency of any level, but its small size limits how much it can hold.

L2 Cache – Level 2

- Purpose: Backup to L1; holds more data but is slightly slower.

- Location: Either per core or shared between a few cores.

- Speed: Typically around 10–20 clock cycles of latency.

- Size: 256KB to 2MB per core.

L2 cache acts as the second layer — if the CPU misses L1, it checks L2 before going to L3.

L3 Cache – Level 3

- Purpose: Shared cache that improves communication between cores.

- Location: Typically shared by all cores on the same CPU die (on multi-chiplet designs such as AMD Ryzen, by the cores on each chiplet).

- Speed: Roughly 30–80 clock cycles of latency, depending on the design.

- Size: 4MB to 64MB or more.

L3 cache ensures multi-core efficiency — when one core processes data, others can access it quickly if needed.
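The lookup order described in this section (L1, then L2, then L3, then RAM) can be sketched as a small Python simulation. The capacities and per-level cycle costs are hypothetical, chosen to make the output easy to follow. The model makes two simplifications:

- It adds up the lookup cost of every level it checks.
- It copies a line into every faster level on the way back.

Real CPUs differ in the details; for example, some L3 caches are inclusive of L2 and others are not. Treat this as an illustration of the idea, not a model of any specific processor. The names `Level` and `access` are our own.

```python
from collections import OrderedDict


class Level:
    """One cache level: a name, a hypothetical lookup cost, and an LRU set of addresses."""

    def __init__(self, name, capacity, latency):
        self.name = name
        self.capacity = capacity
        self.latency = latency
        self.lines = OrderedDict()

    def lookup(self, address):
        if address in self.lines:
            self.lines.move_to_end(address)  # refresh LRU position on a hit
            return True
        return False

    def fill(self, address):
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)  # evict least recently used
        self.lines[address] = True


def access(hierarchy, address, ram_latency):
    """Check L1, then L2, then L3, then RAM; return (where it was found, total cycles)."""
    cycles = 0
    for i, level in enumerate(hierarchy):
        cycles += level.latency
        if level.lookup(address):
            # Copy the line into the faster levels that missed.
            for upper in hierarchy[:i]:
                upper.fill(address)
            return level.name, cycles
    # Missed every cache level: pay the RAM cost and fill all levels.
    cycles += ram_latency
    for level in hierarchy:
        level.fill(address)
    return "RAM", cycles


hierarchy = [
    Level("L1", capacity=2, latency=4),
    Level("L2", capacity=4, latency=14),
    Level("L3", capacity=8, latency=50),
]
results = [access(hierarchy, addr, ram_latency=300) for addr in [0, 1, 0, 2, 3, 1]]
for served_by, cycles in results:
    print(served_by, cycles)
```

The first touch of each address goes all the way to RAM, and the repeated access to address 0 is served by L1. By the final access, address 1 has already been pushed out of the tiny L1, so L2 catches it at a fraction of the RAM cost.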

Example: How CPU Cache Helps in Real Tasks

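Let’s consider a game running on your PC. Caches don’t sort data by category; each level simply holds whatever the core has used most recently, so in practice:

- L1 Cache holds the handful of instructions and values the core is working on right now, such as the current frame’s update loop and the player input being processed.

- L2 Cache holds a larger slice of recently used code and data, such as physics and game-logic state for nearby objects.

- L3 Cache holds the bigger shared working set, such as AI states and world data, that several cores may need.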

Without cache, the CPU would constantly fetch data from RAM, leading to frame stutter, lag, and lower FPS.

Beyond the Basics: Cache Policies and Coherency

The operation of the cache is managed by sophisticated algorithms and policies that ensure efficiency and correctness:

- Replacement Policies: When a cache is full and new data needs to be loaded, a replacement policy decides which old data to evict. Common algorithms include Least Recently Used (LRU), which removes the data that hasn’t been accessed for the longest time. Real hardware usually implements a cheaper approximation such as pseudo-LRU.

- Write Policies: These policies determine how data written to the cache is synchronized with main memory. A write-through cache immediately updates main memory. A write-back cache instead marks the data as “dirty” and updates main memory only when the cache line is evicted. This cuts memory traffic when the same line is written repeatedly, so write-back generally performs better.

- Cache Coherency: In multi-core processors, each core has its own L1 (and often L2) cache. Cache coherency protocols (like MESI) ensure that when one core modifies a piece of data, other cores’ copies of it are invalidated. Those cores must then fetch the updated value instead of using stale, incorrect data.
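MESI is named after the four states a cache line can be in within one core’s cache: Modified, Exclusive, Shared, and Invalid. The Python sketch below is a simplified, hypothetical model of one cache line shared by three cores. It shows the central idea: a write invalidates every other core’s copy, and a dirty (Modified) copy is written back when another core asks for it. Real processors use refinements such as MOESI or MESIF and many transient states that this toy ignores. The `MESILine` class is our own illustration.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"
    EXCLUSIVE = "E"
    SHARED = "S"
    INVALID = "I"


class MESILine:
    """Tracks the MESI state of one cache line across several cores' private caches."""

    def __init__(self, num_cores):
        self.states = [State.INVALID] * num_cores
        self.writebacks = 0  # times a dirty copy had to be written back to memory

    def read(self, core):
        if self.states[core] != State.INVALID:
            return  # read hit in M, E, or S: no state change needed
        others = [c for c, s in enumerate(self.states) if c != core and s != State.INVALID]
        for c in others:
            if self.states[c] == State.MODIFIED:
                self.writebacks += 1  # dirty data is written back before sharing
            self.states[c] = State.SHARED
        # Exclusive if no other core holds the line, otherwise Shared.
        self.states[core] = State.SHARED if others else State.EXCLUSIVE

    def write(self, core):
        # Invalidate every other core's copy before this core may modify the line.
        for c, s in enumerate(self.states):
            if c != core and s != State.INVALID:
                if s == State.MODIFIED:
                    self.writebacks += 1
                self.states[c] = State.INVALID
        self.states[core] = State.MODIFIED

    def snapshot(self):
        return "".join(s.value for s in self.states)


line = MESILine(num_cores=3)
history = []
for op, core in [("read", 0), ("read", 1), ("write", 1), ("read", 2), ("write", 0)]:
    getattr(line, op)(core)
    history.append(line.snapshot())
print(history, "write-backs:", line.writebacks)
```

Each string shows the state of the line in cores 0, 1, and 2 after each operation. Core 1’s write invalidates core 0’s shared copy (`IMI`). Core 2’s later read then forces core 1’s modified copy to be written back and shared (`ISS`).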

Cache Mapping & Associativity

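Caches also need a rule for where a given memory address is allowed to be stored. There are three common strategies:

- Direct-Mapped Cache: Each memory address maps to exactly one cache line. Simple, but prone to collisions: two frequently used addresses that map to the same line keep evicting each other.

- Fully Associative Cache: Any data can go anywhere in the cache. Flexible but expensive.

- Set-Associative Cache: A balanced approach. Each address maps to one set, a small group of lines, and can occupy any line within that set.

Most modern CPUs use set-associative caches (e.g., 8-way, 16-way) as a practical balance between hit rate and hardware cost.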
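In a set-associative cache, the hardware splits each address into three parts:

- An offset: the byte within a cache line.
- A set index: which set to look in.
- A tag: which identifies the line within that set.

The Python sketch below assumes 64-byte lines, a common size, and uses LRU within each set. It compares a direct-mapped cache and a 2-way cache of the same total size (8 lines) on two addresses that collide in the same set. The class and function names are illustrative, not taken from any real simulator.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line (a common size, assumed here)


def split_address(address, num_sets, line_size=LINE_SIZE):
    """Split a byte address into (tag, set_index, offset)."""
    offset = address % line_size
    line_number = address // line_size
    set_index = line_number % num_sets
    tag = line_number // num_sets
    return tag, set_index, offset


class SetAssociativeCache:
    """num_sets sets of `ways` lines each, with LRU replacement inside each set.

    ways=1 gives a direct-mapped cache; num_sets=1 gives a fully associative one.
    """

    def __init__(self, num_sets, ways):
        self.num_sets = num_sets
        self.ways = ways
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.hits = 0
        self.misses = 0

    def access(self, address):
        tag, index, _ = split_address(address, self.num_sets)
        lines = self.sets[index]
        if tag in lines:
            self.hits += 1
            lines.move_to_end(tag)
            return
        self.misses += 1
        if len(lines) >= self.ways:
            lines.popitem(last=False)  # evict the LRU line in this set only
        lines[tag] = True


# Same total capacity (8 lines), two organizations.
direct = SetAssociativeCache(num_sets=8, ways=1)
two_way = SetAssociativeCache(num_sets=4, ways=2)

# Two addresses that land in set 0 in both caches, accessed alternately.
a, b = 0, 8 * LINE_SIZE
for address in [a, b] * 4:
    direct.access(address)
    two_way.access(address)

print("direct-mapped:", direct.hits, "hits,", direct.misses, "misses")
print("2-way:        ", two_way.hits, "hits,", two_way.misses, "misses")
```

The direct-mapped cache has one line per set, so the two addresses keep evicting each other and every access misses. The 2-way cache holds both addresses, so after the first two misses every access hits.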

CPU Cache and Performance: Real-World Impact

More cache generally means better performance, but not always linearly. The benefit depends on:

- Workload type: Games and simulations benefit heavily.

- Cache architecture: Faster latency and smart policies improve gains.

- Core count: Shared L3 cache helps multicore coordination.

Example:

- Intel i5 with 12MB L3 cache may outperform an older i7 with 8MB in certain multitasking scenarios.

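- AMD’s Ryzen X3D models stack extra L3 cache on top of the processor die (marketed as “3D V-Cache”) to boost gaming performance. This reaches 96MB of L3 on 8-core models such as the 7800X3D and 128MB on the 16-core 7950X3D.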
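One reason the benefit of more cache is not linear is the size of a workload’s working set: the data it touches repeatedly. The Python sketch below models a hypothetical workload, not a benchmark. It loops 10 times over 16 cache lines and measures the hit rate of an idealized LRU cache at several sizes. The function name `lru_hit_rate` is our own.

```python
from collections import OrderedDict


def lru_hit_rate(capacity, accesses):
    """Hit rate of an idealized fully associative LRU cache with `capacity` lines."""
    lines = OrderedDict()
    hits = 0
    for address in accesses:
        if address in lines:
            hits += 1
            lines.move_to_end(address)
        else:
            if len(lines) >= capacity:
                lines.popitem(last=False)  # evict least recently used
            lines[address] = True
    return hits / len(accesses)


# Hypothetical workload: loop over a working set of 16 lines, 10 times.
working_set = 16
accesses = list(range(working_set)) * 10

for capacity in [4, 8, 12, 15, 16, 32]:
    print(f"{capacity:>2} lines: {lru_hit_rate(capacity, accesses):.0%} hit rate")
```

The results show a cliff rather than a smooth curve:

- While the cache is even one line smaller than the working set, LRU evicts each line just before it is needed again, and the hit rate stays at 0%.
- Once the whole working set fits, the hit rate jumps to 90%, because only the first pass misses.
- Doubling the cache beyond that adds nothing.

Real CPUs soften this cliff (their replacement policies are not pure LRU). Even so, the shape explains why extra cache helps some workloads dramatically and others barely at all.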

CPU Cache vs RAM vs Storage

| Feature | CPU Cache | RAM | SSD/HDD |
|---|---|---|---|
| Speed (access latency) | Nanoseconds | Tens of nanoseconds | Microseconds to milliseconds |
| Location | Inside CPU | On motherboard | Separate storage drive |
| Size | KB to MB | GBs | Hundreds of GBs or TBs |
| Cost per byte | Very high | Moderate | Low |
| Purpose | Store frequently used instructions/data | Store active programs/data | Store long-term data |

The cache is not a replacement for RAM — it’s a performance booster to reduce latency between CPU and memory.

Frequently asked questions

How to Check CPU Cache Size?

You can check your CPU’s cache in different ways:

- Windows: Task Manager → Performance → CPU tab.

- Linux: Use the `lscpu` command.

- Online Specs: Search your CPU model (e.g. “Ryzen 7 5800X cache size”).
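On Linux you can also read the cache details directly. The kernel exposes them under `/sys/devices/system/cpu/cpu0/cache/`, with one `indexN` directory per cache containing files such as `level`, `type`, and `size`. Here is a short Python sketch that prints them. The function name is our own, and on systems without this sysfs directory it simply returns an empty list.

```python
import os


def read_cache_info(cpu_dir="/sys/devices/system/cpu/cpu0/cache"):
    """Return a list of dicts describing each cache Linux reports for one CPU.

    Each sysfs entry index0, index1, ... contains `level`, `type` and `size`
    files (for example "1", "Data", "48K").
    """
    caches = []
    if not os.path.isdir(cpu_dir):
        return caches  # not Linux, or sysfs not available
    entries = [e for e in os.listdir(cpu_dir) if e.startswith("index") and e[5:].isdigit()]
    for entry in sorted(entries, key=lambda e: int(e[5:])):  # numeric order: index0, index1, ...
        info = {}
        for field in ("level", "type", "size"):
            path = os.path.join(cpu_dir, entry, field)
            try:
                with open(path) as f:
                    info[field] = f.read().strip()
            except OSError:
                info[field] = "?"  # field missing or unreadable
        caches.append(info)
    return caches


for cache in read_cache_info():
    print(f"L{cache['level']} {cache['type']:<12} {cache['size']}")
```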

Does More Cache Always Mean Better Performance?

Not necessarily. It depends on:

- Architecture efficiency

- Workload pattern

- Cache latency

A well-optimized 6MB cache may outperform a poorly implemented 12MB one.

But for gaming, video editing, and data-heavy tasks, larger caches often provide noticeable improvements.

CPU cache design continues to evolve. A significant innovation is 3D stacking, where cache memory is stacked vertically on top of the CPU die. This technique, pioneered by AMD’s 3D V-Cache, dramatically increases cache capacity without increasing the chip’s footprint, leading to substantial performance gains in cache-sensitive applications like gaming. As workloads become more demanding, the role of CPU cache as a key differentiator in CPU performance will only grow more important.

In conclusion, the CPU cache is not just a minor technical detail but a fundamental architecture that enables modern processors to run at astounding speeds. By understanding the distinct roles of L1, L2, and L3 caches, you can make more informed decisions when evaluating computer hardware and better understand the technology that powers your digital life. To see how cache fits into the bigger picture of processor performance, explore our other articles on CPU architecture and how to choose a processor.